Class 14: Machine Learning 1 - General#

Goals of today’s class:

Understand, at a high level, how to train and evaluate a classifier.

Implement some classifiers (that don’t involve neural networks) on a network dataset.

Experiment with tweaking classifier type, hyperparameters, and training data size. Reason about how this impacts performance.

Come in. Sit down. Open Teams.

Find your notebook in your /Class_14/ folder.

Today’s Dataset#

The network you’re about to look at is a German language network of Twitch streamers. Each node represents a user, and an edge between them means that they have a mutual friendship (so this is an undirected network). We also have some limited data about each user’s account. There’s a binary classification task for this dataset that involves predicting whether or not a streamer uses explicit language.

import json

import pandas as pd

import networkx as nx

G = nx.read_edgelist(open('./data/twitch/DE/musae_DE_edges.csv', 'rb'), delimiter=',', nodetype=int) # edges between users

# has info about each account plus binary indicators of whether a streamer uses explicit language

df_attrs_explicit = pd.read_csv('./data/twitch/DE/musae_DE_target.csv')

attrs = {

a['new_id']: {

'days': a['days'],

'views': a['views'],

'partner': a['partner']

} for a in df_attrs_explicit.to_dict(orient="records")

}

nx.set_node_attributes(G, attrs, name='ml_features')

Basic ML Principles#

Machine learning basically involves building algorithms that can learn things from data and make generalizations to new data. Supervised machine learning is the practice of building a model when we have training data – the information that we use to inform our model how the world works – that has correct ground truth answers. When you’re building a linear regression or a Naive Bayes classifier (for example), you’re doing supervised machine learning.

In contrast, unsupervised machine learning (which we won’t be doing much of today) is useful when we don’t have access to exact ground truths about desired outcomes, but we have to build insights with our dataset anyway. When we do unsupervised ML, we figure out what patterns are present in unlabeled data. Clustering is one type of unsupervised machine learning technique; when we cluster a dataset, we’re learning to recognize similar kinds of data points. Generating new examples is also (kind of) an unsupervised ML task. Training GPT and its ilk to produce language requires massive amounts of scraped text that doesn’t have “answers” attached to it per se. Humans aren’t going through and labeling the terabytes of data that GPT is trained on (before it is fine-tuned for specific tasks). Instead, at a high level, GPT and friends are trained to produce text that we can’t tell comes from a computer – it should “look like” the text that showed up in the massive amounts of data it was trained on. Once GPT has been trained in this fashion, it is also fine-tuned for specific tasks – this is where human labeling and direction do come in.

Today, when we talk about doing machine learning we’re going to mostly focus on supervised ML, specifically binary classification. Binary classification involves learning to answer a yes-or-no question about new data points after optimizing a model’s parameters using a set of data points with established answers.

Train/Test Splits#

Training models requires training data and test data at minimum. Our training data is what the model learns patterns from, and the test data tells us how well the model does on data it hasn’t seen before.

If we were to test on the same data we trained on, what could potentially happen?

Spoiler: This is called overfitting. A lot of the time, when we train a model we’re worried about this happening. Overfitting happens when your model learns the data it’s trained on too well – it’s too accustomed to the training data and doesn’t generalize well to new data. We might have too many parameters in our model, such that it’s able to memorize the quirks of the training set too well, or we might’ve selected our training set improperly.

I once saw an example from a talk about explainability where scientists trained a model to guess where a Google StreetView photo was taken; it did really well on images taken at the same time as other images in the training set and really poorly on images taken at a later date. Turns out that the model was memorizing how windshield smudges changed over time and correlated the state of the windshield smudges to the car’s location. So when the smudges changed (but the landscape didn’t), the model was not able to identify the locations anymore.

When we construct training and testing sets, we need to make sure the data that we use for training has similar patterns to the test data while keeping the training and testing sets strictly separate from each other. We usually accomplish this by randomly splitting the dataset into two distinct parts. In this case, we’ll be using sklearn, also known as scikit-learn, which is a general-purpose machine learning library for Python. sklearn has a handy function called train_test_split that we can use to generate a training set and test set that are randomly selected. We can also set the random_seed parameter to be a fixed number; this will ensure that we get the same train/test split every time.

from sklearn.model_selection import train_test_split

df_attrs_explicit = df_attrs_explicit.fillna(0.0)

explicit_train, explicit_test = train_test_split(df_attrs_explicit, test_size=0.3, random_state=42)

print('The training set length is', len(explicit_train), 'and the test set length is', len(explicit_test))

The training set length is 6648 and the test set length is 2850

Measuring How Well We Do#

The next thing we have to think about, once we’ve trained our model, is evaluating how well it performs on held-out data (i.e. the test set). For a binary classifier, we can define four kinds of events that might occur:

True Value: 1 |

True Value: 0 |

|

|---|---|---|

Predicted Value: 1 |

True Positive (TP) |

False Positive (FP) |

Predicted Value: 0 |

False Negative (FN) |

True Negative (TN) |

There are a few ways we can measure how well a binary classifier does, and sometimes we need to look at several measures in order to get a full picture of itss performance.

Measure |

Definition |

Formula |

|---|---|---|

Accuracy |

How often do we get it right? |

\(\frac{TP + TN}{TP + FP + TN + FN}\) |

Recall |

How many true positives do we detect out of all positive examples? |

\(\frac{TP}{TP + FN}\) |

Precision |

How many of our positive classifications are truly positive? |

\(\frac{TP}{TP + FP}\) |

F1 Score |

Harmonic mean of precision and recall |

\(\frac{2TP}{2TP + FP + FN}\) |

Let’s try a toy example using the dataframe with user attributes and explicit language usage. sklearn has a lot of classifiers; right now we’re going to try using a random forest classifier. Random forest classifiers consist of many decision tree classifiers, each of which is trained on a different subset of the training dataset. Broadly speaking, decision tree classifiers learn decision rules from the data; they can be very complicated, but you can also force them to limit their breadth or depth. The average prediction output by all the decision trees for a particular data point is the random forest classifier’s final output.

from sklearn.ensemble import RandomForestClassifier

TRAIN_COLS = set(['days', 'views', 'partner'])

explicit_train_x = explicit_train[[c for c in explicit_train.columns if c in TRAIN_COLS]].to_numpy()

explicit_test_x = explicit_test[[c for c in explicit_test.columns if c in TRAIN_COLS]].to_numpy()

explicit_train_y = explicit_train['mature'].to_numpy()

explicit_test_y = explicit_test['mature'].to_numpy()

clf = RandomForestClassifier(criterion='log_loss')

clf.fit(explicit_train_x, explicit_train_y.ravel())

preds = clf.predict(explicit_test_x)

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, confusion_matrix

import matplotlib.pyplot as plt

print('accuracy score:', accuracy_score(explicit_test_y, preds))

print('precision:', precision_score(explicit_test_y, preds))

print('recall:', recall_score(explicit_test_y, preds))

print('F1:', f1_score(explicit_test_y, preds))

tn, fp, fn, tp = confusion_matrix(explicit_test_y, preds).ravel().tolist()

print()

print('Rate of True Negatives Correctly Identified:', tn / (tn + fp))

print('False Positives Out of All Negative Labels (false alarm rate):', fp / (fp + tn))

print('False Negatives Out of All Positive Labels (failure to detect):', fn / (fn + tp))

accuracy score: 0.5835087719298245

precision: 0.6476906552094522

recall: 0.694300518134715

F1: 0.6701861628230064

Rate of True Negatives Correctly Identified: 0.4106019766397125

False Positives Out of All Negative Labels (false alarm rate): 0.5893980233602875

False Negatives Out of All Positive Labels (failure to detect): 0.30569948186528495

Hyperparameters#

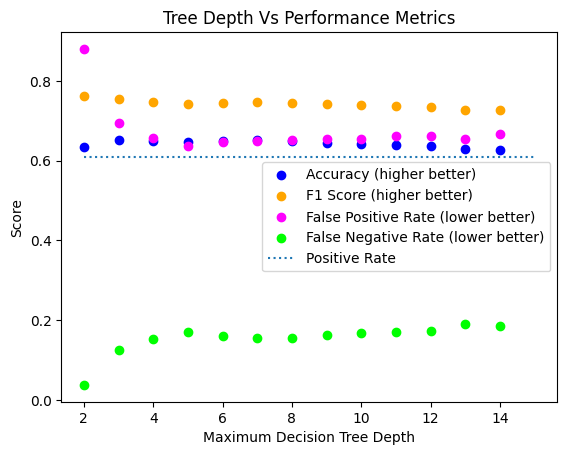

Let’s see if we can tweak our decision tree classifier a bit. When we talk about hyperparameters in machine learning, we’re referring to the parameters that govern a model’s behavior, not the parameters that the model learns itself in order to fit the data. For a decision tree, we might control its depth or the maximum number of leaf nodes it’s allowed to make. When we are working with a classifier to figure out the best hyperparameters, we still don’t want to use our test set to see how well we’re doing when we change hyperparameters. Instead, we’ll add another split to the dataset, known as the validation set. We use the validation set to see how well we perform on data we didn’t see in training; this lets us tune our hyperparameters effectively before we see how well our final model performs on the test set.

When we’re tweaking a model’s hyperparameters, we usually split our data into 3 sets – training, validation, and test. We use our training set to train the model; the validation set is used to figure out which choice of hyperparameters (like learning rate, optimizer, network size, etc.) works best for the dataset & model; and the testing set is for figuring out how well the finalized model does. The model never trains on the validation or testing data - it only updates itself based on the training data. A good rule of thumb is to use 10-15% of data for validation, 15-20% of the data for testing, and the rest for training. You should randomly split up your data such that the training, testing, and validation sets have similar distributions.

import numpy as np

explicit_train, explicit_val = train_test_split(df_attrs_explicit, test_size=0.3, random_state=42)

explicit_train_x = explicit_train[[c for c in explicit_train.columns if c in TRAIN_COLS]].to_numpy()

explicit_val_x = explicit_val[[c for c in explicit_val.columns if c in TRAIN_COLS]].to_numpy()

explicit_train_y = explicit_train['mature'].to_numpy()

explicit_val_y = explicit_val['mature'].to_numpy()

accuracies = []

f1s = []

false_alarms = []

failed_detections = []

for depth in range(2, 15):

clf = RandomForestClassifier(max_depth=depth)

clf.fit(explicit_train_x, explicit_train_y.ravel())

preds = clf.predict(explicit_val_x)

accuracies.append(accuracy_score(explicit_val_y, preds))

f1s.append(f1_score(explicit_val_y, preds))

tn, fp, fn, tp = confusion_matrix(explicit_val_y, preds).ravel().tolist()

false_alarms.append(fp / (fp + tn))

failed_detections.append(fn / (fn + tp))

plt.scatter([i for i in range(2, 15)], accuracies, color='blue', label='Accuracy (higher better)')

plt.scatter([i for i in range(2, 15)], f1s, color='orange', label='F1 Score (higher better)')

plt.scatter([i for i in range(2, 15)], false_alarms, color='magenta', label='False Positive Rate (lower better)')

plt.scatter([i for i in range(2, 15)], failed_detections, color='lime', label='False Negative Rate (lower better)')

plt.plot([2, 15], [np.mean(explicit_val_y), np.mean(explicit_val_y)], linestyle=':', label='Positive Rate')

plt.legend()

plt.title('Tree Depth Vs Performance Metrics')

plt.xlabel('Maximum Decision Tree Depth')

plt.ylabel('Score')

Text(0, 0.5, 'Score')

As you can see, we are doing only marginally better than random guessing right now.

What does that mean about how useful our classifier is?

Let’s see what happens when we incorporate information about the network into our classification.

Collective Classification#

When we do collective classification, we have two variables: a graph \(G\) and a node \(v \in G\) that we want to label (classify). Some of \(v\)’s neighbors are labeled, and some are not. In this setting, we can classify \(v\) based on any subset of the following:

The correlation between \(v\)’s label and its observed attributes (this is what we just tried!)

The correlation between \(v\)’s label and its neighbors’ known labels and observed attributes

The correlation between \(v\)’s label and its unlabeled neighbors’ unknown labels.

A lot of the collective classification methods discussed here (paper coauthored by our very own Dr. Tina Eliassi-Rad) involve horrifying amounts of statistical inference, which is beyond the scope of this class. However, one of the methods discussed there, iterative classification, is fairly tractable. Let’s take a look!

Iterative Classification#

First, you choose a local classifier, \(f\), that can be used to determine the label of a node given the labels (seen & unseen) of its neighbors. Tina calls this “guilt by association”. Your local classifier \(f\) can give you a label outright, or it can give you a probability distribution over labels (in which case you pick the label that gives you the best value). Since real-world networks notably do not have fixed degree, but most classifiers assume fixed-length feature vectors, we can’t just feed an arbitrary-length vector into any function \(f\). Instead, we have to come up with some sort of pooling function, like MAX, COUNT, MEAN, SUM, etc. and pool the labels in some pre-determined way.

We then move on to the actual labeling business. First, we do a “bootstrapping” step – we label each node using our incomplete information, giving it our best guess. Next, we do the iterative classification process. Each time, we generate a new ordering O of nodes, then we go through and label each node according to what its neighbors are currently labeled as, using \(f\). We do this a bunch of times until the labels stop changing or we’ve gone through a pre-determined number of iterations, after which we should give up.

explicit_train_ic, explicit_test_ic = train_test_split(df_attrs_explicit, test_size=0.5, random_state=42)

map_train = {user: mature for user, mature in zip(explicit_train_ic['new_id'], explicit_train_ic['mature'])}

map_test = {user: mature for user, mature in zip(explicit_test_ic['new_id'], explicit_test_ic['mature'])}

def local_classifier(neighbor_labels):

"""

Given a list of neighbor labels, return True if there are at least 4 True values in the list.

"""

return np.sum(neighbor_labels) >= 4

def iterative_classification(G, map_train, map_test, local_classifier=local_classifier, max_iter=20):

"""

Perform the iterative classification algorithm on the network G given split train and test sets.

Inputs:

G: networkx graph object; should have the same nodes as the keys in map_train and map_test combined.

map_train and map_test: dicts; mappings from the nodes in the train & test sets to their binary classification ground truth.

local_classifier: function that takes in a list of boolean values and returns a single boolean value

max_iter: denotes how many iterations to do before stopping (if no convergence occurs).

Returns:

labels: mapping over the entire dataset (with labels from map_train preserved) from nodes to their binary classification.

"""

labels = {v: 0 for v in map_test.keys()}

for node in map_test.keys():

neighbors = G.neighbors(node)

neighbor_labels = [map_train[n] for n in neighbors if n in map_train]

labels[node] = local_classifier(neighbor_labels)

labels = labels | map_train

n_iter = 0

nodes_list = [k for k in map_test.keys()]

while n_iter < max_iter:

prev_labels = labels.copy()

np.random.shuffle(nodes_list)

for node in nodes_list:

neighbors = G.neighbors(node)

neighbor_labels = [prev_labels[n] for n in neighbors]

labels[node] = local_classifier(neighbor_labels)

if labels == prev_labels:

return labels

n_iter += 1

return labels

labels = iterative_classification(G, map_train, map_test, local_classifier=local_classifier, max_iter=20)

ground_truth = []

preds = []

for k, v in map_test.items():

preds.append(labels[k])

ground_truth.append(v)

print('accuracy score:', accuracy_score(ground_truth, preds))

print('precision:', precision_score(ground_truth, preds))

print('recall:', recall_score(ground_truth, preds))

print('F1:', f1_score(ground_truth, preds))

tn, fp, fn, tp = confusion_matrix(ground_truth, preds).ravel().tolist()

print()

print('Rate of True Negatives Correctly Identified:', tn / (tn + fp))

print('False Positives Out of All Negative Labels (false alarm rate):', fp / (fp + tn))

print('False Negatives Out of All Positive Labels (failure to detect):', fn / (fn + tp))

accuracy score: 0.6390819119814698

precision: 0.6576116191500807

recall: 0.8471933471933472

F1: 0.7404603270745003

Rate of True Negatives Correctly Identified: 0.3166935050993022

False Positives Out of All Negative Labels (false alarm rate): 0.6833064949006978

False Negatives Out of All Positive Labels (failure to detect): 0.15280665280665282

Your Turn!#

Can you toy with the local_classifier function to produce better performance with this classification algorithm?

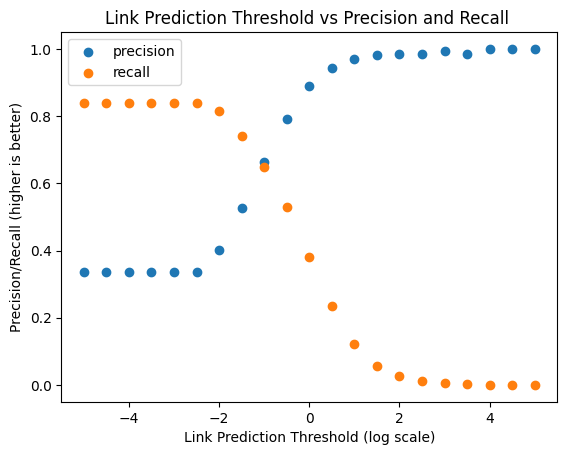

Link Prediction#

Next, we’re going to learn about predicting the existence of missing links (or links that will be formed at the next time step) in a graph, also known as link prediction. For a more comprehensive overview of link prediction, check out this survey article by Martinez et al., which this section draws from quite a bit. Many link prediction tasks are formulated as a ranking problem, where all pairs of nodes that aren’t connected yet get a score that is proportional to the likelihood of a link forming between them. Then we usually choose a threshold, above which we predict a link will exist. We can also view link prediction as a binary classification problem; today we will explore both points of view.

Similarity-Based Methods#

Homophily refers to the tendency of similar nodes to be connected to each other. Not all networks are completely homophilous on all dimensions (think dating networks, for example), but many are. Similarity-based methods operate on the assumption that the more similar a pair of nodes is, the more likely they are to be connected to each other. We can define similarity in many different ways, but in general, we choose a similarity measure, rank candidate node pairs by their similarity, and then pick a threshold above which we say we will predict a link exists.

A Limited Whirlwind Tour of Similarity Measures#

In this table, we’ve laid out some of the more commonly used similarity measures. Some of them are based on local structural information about a node’s neighborhood (“Local”); others use global approaches, usually involving paths between two nodes or random walks, to determine similarity (“Global”); there are also approaches that mix the two, considering more topological information than strictly local measures while still being relatively fast to compute (“Quasi-Local”. Today we’ll focus on local measures of similarity; for a node pair \((u, v)\), we will define the similarity for a number of measures.

Similarity Measure |

Definition |

Formula |

|---|---|---|

Common Neighbors |

How many neighbors do \(u\) and \(v\) have in common? |

$ |

Adamic-Adar Index |

Common neighbors but normalized by neighbors’ degree |

$\sum\limits_{w \in N(u) \cap N(v)} \frac{1}{ |

Preferential Attachment Index |

Product of nodes’ number of neighbors |

$ |

Jaccard Index |

Ratio of shared neighbors to total number of neighbors |

$\frac{ |

What are some other ways we could measure similarity/proximity in the network, perhaps looking at paths between nodes?

import itertools

import random

# make a train/test split of edges in the graph; we'll remove 1/4 of all edges to start.

edges = [e for e in G.edges]

edges_train, edges_test = train_test_split(edges, test_size=0.25, random_state=42)

# get nodes that don't show up in the training set

nodes_in_train = set([node for pair in edges_train for node in pair])

nodes_in_test = set([node for pair in edges_test for node in pair])

node_not_in_G = nodes_in_test - nodes_in_train

# construct a graph with just the known (i.e. training) edges

G_train = nx.from_edgelist(edges_train)

# make sure we have all the nodes in the original graph in case we're missing some in the set of training edges.

G_train.add_nodes_from(list(node_not_in_G))

def generate_sample_negative_edges(G, edges_test, multiplier=2.1):

"""

Given a graph G and a test set of edges that will be predicted,

produce a list of edges that don't exist in G OR in the test set.

Parameters:

G: networkx graph object; contains only edges from a training set (i.e. it's a masked version of an original graph)

edges_test: the edges missing from the original graph that are not present in G

multiplier: very approximately multiply the number of test edges by this to express the desired quantity of negative examples.

Returns:

list of candidate edges that don't exist in G OR in edges_test

(negative examples for a link prediction strategy to be evaluated on).

"""

nodes = [n for n in G.nodes]

edge_set = set([e for e in G.edges]).union(set(edges_test))

combos = [c for c in itertools.combinations(nodes, 2)]

candidates = random.sample(combos, int(len(edges_train) * multiplier))

return [c for c in candidates if c not in edge_set]

# make some fake edges

candidate_edges = generate_sample_negative_edges(G_train, edges_test)

# run adamic adar index on a limited subset of negative & positive candidate edges

similarities = nx.adamic_adar_index(G_train, candidate_edges + edges_test)

# rank edges by their similarity scores

similarities = sorted(similarities, key=lambda b: b[2], reverse=True)

log_max_similarity = np.log(int(max([s[2] for s in similarities]))) # similarity is roughly distributed on a log scale

set_edges_test = set(edges_test)

# evaluating precision & recall at different threshold values

precisions = []

recalls = []

for threshold in np.arange(-5, log_max_similarity, 0.5):

# edges w/ similarity above the assigned threshold; contains all true & false positives

edges_above = set([(s[0], s[1]) for s in similarities if s[2] > np.exp(threshold)])

# edges w/ similarity at or below the assigned threshold; contains all true & false negatives

edges_below = set([(s[0], s[1]) for s in similarities if s[2] <= np.exp(threshold)])

true_pos = len(set_edges_test.intersection(edges_above)) # number of true positives

false_neg = len(set_edges_test.intersection(edges_below)) # number of false negatives

precisions.append(true_pos / len(edges_above))

recalls.append(true_pos / (true_pos + false_neg))

# plots precision and recall at various thresholds

plt.title('Link Prediction Threshold vs Precision and Recall ')

plt.scatter(np.arange(-5, log_max_similarity, 0.5), precisions, label='precision')

plt.scatter(np.arange(-5, log_max_similarity, 0.5), recalls, label='recall')

plt.legend()

plt.xlabel('Link Prediction Threshold (log scale)')

plt.ylabel('Precision/Recall (higher is better)')

Text(0, 0.5, 'Precision/Recall (higher is better)')

For Discussion:#

Based on this plot, what would you choose as the approximate threshold? Why?

Why do you think we see the trends that we see (high threshold = terrible recall; low threshold = bad precision)?

Would this task get harder if we looked at all possible links (i.e. all links that didn’t exist in the training graph)?

Classifier-Based Methods#

We can also use binary classifiers for link prediction. In this case, we treat our examples more independently (i.e. without ranking). We can use node features, information about network topology, and even the similarity measures we just used. Discussing the various binary classifiers that exist is beyond the scope of this lesson, but we will try out a random forest classifier on our dataset that incorporates the 4 similarity measures we just discussed.

# make some fake edges

candidate_edges_train = generate_sample_negative_edges(G_train, edges_test)

candidate_edges_test = generate_sample_negative_edges(G_train, edges_test + candidate_edges_train)

# create similarity measures for training data

adamic_adar_train = nx.adamic_adar_index(G_train, candidate_edges_train + edges_train)

jaccard_train = nx.jaccard_coefficient(G_train, candidate_edges_train + edges_train)

pref_attachment_train = nx.preferential_attachment(G_train, candidate_edges_train + edges_train)

common_neighbors_train = [(e[0], e[1], len(nx.common_neighbors(G_train, e[0], e[1]))) for e in candidate_edges_train + edges_train]

# create similarity measures for test data

adamic_adar_test = nx.adamic_adar_index(G_train, candidate_edges_test + edges_test)

jaccard_test = nx.jaccard_coefficient(G_train, candidate_edges_test + edges_test)

pref_attachment_test = nx.preferential_attachment(G_train, candidate_edges_test + edges_test)

common_neighbors_test = [(e[0], e[1], len(nx.common_neighbors(G_train, e[0], e[1]))) for e in candidate_edges_test + edges_test]

# build numpy matrices of the similarity measures

x_train = np.array(

[[t[-1] for t in arr]

for arr in [adamic_adar_train, jaccard_train, pref_attachment_train, common_neighbors_train]

]

)

y_train = np.hstack((np.zeros(len(candidate_edges_train)), np.ones(len(edges_train))))

x_test = np.array(

[[t[-1] for t in arr]

for arr in [adamic_adar_test, jaccard_test, pref_attachment_test, common_neighbors_test]

]

)

y_test = np.hstack((np.zeros(len(candidate_edges_test)), np.ones(len(edges_test))))

# create a random forest classifier; fit it to the training data & predict for the test data.

clf = RandomForestClassifier(criterion='log_loss')

clf.fit(x_train.T, y_train)

preds = clf.predict(x_test.T)

Briefly, how well did we do?

print('accuracy score:', accuracy_score(y_test, preds))

print('precision:', precision_score(y_test, preds))

print('recall:', recall_score(y_test, preds))

print('F1:', f1_score(y_test, preds))

accuracy score: 0.9088524212089107

precision: 0.6440659170049017

recall: 0.7584954943189238

F1: 0.6966127716739433

Freeform Experimentation!#

For the remainder of the class period, pick a question you’d like to answer with respect to link prediction, and write code to answer it.

Here are some examples:

How does classifier performance change as the ratio of negative to positive examples increases?

Which kinds of classifiers in the sklearn binary classification pantheon perform better for link prediction?

What happens if we add in new similarity measures to the code from the previous example? What if we remove some similarity measures?

What if we drastically change the prevalence of negative examples in the training set relative to the prevalence in the test set?

# Your Turn!

Next time…#

Introduction to Machine Learning 2 — Graph ML class_15_graph_ml_2

References and further resources:#

Class Webpages

Jupyter Book: https://network-science-data-and-models.github.io/phys7332_fa25/README.html

Github: https://github.com/network-science-data-and-models/phys7332_fa25/

Syllabus and course details: https://brennanklein.com/phys7332-fall25